Wandsworth GP Federation held a Quality Event in May this year on the hot topic of digital clinical safety, hosted by Dr Devin Gray, Clinical Lead for Digital Transformation, and Claire McAuley, Clinical Safety Officer at South West London ICB.

This case study captures the main points. The goal was to equip the audience with the understanding to identify, assess, and manage risks effectively when using digital tools in primary care.

Why Now? The Digital Transformation in General Practice

The landscape of primary care is rapidly evolving, with digital tools transforming how we deliver patient care. From online consultations to AI-powered scribes, these innovations promise efficiency and improved patient outcomes. However, with every technological advance comes a critical responsibility: ensuring patient safety.

The adoption of digital tools in primary care is experiencing an unprecedented surge. Consider the rapid shift: in Wandsworth, no practices were using online triage in 2020, and now two-thirds are using total triage, with only a handful not using any triage at all. Similarly, in 2023, automation tools were almost non-existent, but today, 89% of Wandsworth practices are leveraging at least one such tool.

This rapid adoption is driven by several factors:

- Mandated Tools: Online consultation tools (like Accurx & eConsult) and Digital Telephony (e.g., X-On, Daisy) are now a part of the GP contract.

- Rising Interest in Automation: Robotic Process Automation (RPA) tools are gaining traction for tasks such as automated patient registrations (e.g., Healthtech-1), lab results/EPS processing (e.g., GP Automate, JifJaff), and long-term condition call/recall (e.g., Abtrace, Hippolabs), freeing up time in our administrative and secretarial teams.

- Emergence of Artificial Intelligence (AI): AI is making inroads with ambient AI scribes (e.g., Heidi, Tandem, Tortus, Anima) and document processing tools (e.g., BetterLetter, OneAdvanced) to improve quality of documentation and coding, save clinician time and improve patients’ experience of consultations.

Understanding Digital Clinical Safety: Principles and Responsibilities

What exactly is digital clinical safety? Digital systems are ubiquitous across the NHS, and while they often enhance patient safety through better integration and communication, they also introduce new risks. Misuse of these “digital products” or “Digital Health IT” can lead to patient harm via failures, design flaws, or human factors like incorrect usage. In essence, digital clinical safety is about managing the risk of harm to patients that could be caused by introducing digital technologies used to support direct patient care. The key principle is that the benefit must always outweigh the risk.

Clinically safe digital systems should:

- Be free from unacceptable risk.

- Be supported by evidence that demonstrates the risk is acceptable.

- Use controls that mitigate risk where necessary.

But how can you be sure the tool you’re implementing is safe? While the supplier has a role to play, your responsibility lies in ensuring your implementation is free from unacceptable risk. This risk management process needs to be considered throughout the lifecycle of the digital system: during development, before going live, when the system goes live, during business as usual, when new functionality is introduced, and even when the system is replaced.

The Role of the Clinical Safety Officer (CSO)

A Clinical Safety Officer (CSO) in NHS primary care is a registered and experienced clinician (doctor, nurse, or allied health professional) responsible for ensuring digital health technologies are used in a way that protects patient safety. Their role centres on clinical risk management, assessing potential issues, overseeing safety protocols, and ensuring compliance with national standards.

In primary care, the CSO acts as a bridge between clinical and technical realms, working with digital transformation teams, IT suppliers, and clinical staff to scrutinise new digital initiatives for clinical risks. This includes developing risk management strategies, documenting hazards, coordinating assessments, and keeping safety measures current.

Currently, most practices are adopting digital tools without any kind of formal risk assessment, let alone a trained CSO. However, a recent priority notification from NHS England’s Chief Clinical Information Officer was unequivocal about the need for deploying organisations to have this kind of training[1]. Even more recently, the Care Quality Commission published a ‘Mythbuster’ on the use of AI in Primary Care and again confirmed the requirement that GP practices must have a CSO if they’re procuring new digital tools[2]. I would therefore highly recommend a clinical lead within your practice (such as a GP partner) undertakes this one-day training as soon as possible.

The Safety Standards: DCB0129 and DCB0160

It’s important to be familiar with the digital clinical safety standards, as they are established in law through the Health and Social Care Act 2012.

- DCB0129: The supplier’s responsibility. This sets clinical risk management requirements for manufacturers of health IT systems[3].

- DCB0160: The practice’s responsibility. This sets clinical risk management requirements for the deployment and use of health IT systems (that’s us as GP practices)[4].

Every GP practice has a legal responsibility to meet the DCB0160 standards. When considering a new digital tool, you should ask the supplier for their Digital Technology Assessment Criteria (DTAC) which gives you a standardised summary of their compliance[5]. Additionally, inquire if they have example DCB0160 compliance packs you can review. Don’t hesitate to involve your federation or ICB to see if templates already exist or if at-scale pricing can be explored.

At its core, clinical safety is a proactive process. It’s not just about reacting when things go wrong but anticipating and preventing potential patient harms. While learning from near misses and significant events is important, the aim is to prevent them in the first place.

A Practical Approach to Digital Clinical Safety: Your Three Key Questions

At its heart, digital clinical safety comes down to three fundamental questions that map directly to the risk management activities of the DCB0160 standard:

- What can go wrong? i.e. risk analysis

- How bad could it be? i.e. risk evaluation

- What should we do about it? i.e. risk control

Let’s break these down.

- What Can Go Wrong?

When something goes wrong, it can lead to a hazard. A hazard is defined as “a condition that has the potential to cause harm.” Think of it like hazard lights on a car – no accident has happened yet, but the car is in a state that could lead to one.

Before a hazard occurs, there’s often a cause (the initiating event) that leads directly to an effect (the immediate consequence). The hazard, if unaddressed, can then lead to harm (the ultimate negative outcome for the patient).

Let’s look at some practical examples to illustrate these concepts:

| | Accurx | ScriptSwitch | Heidi Health |
| --- | --- | --- | --- |
| Cause | A patient submits an Accurx request using a different mobile number than the one saved in their record. | A distracted GP inadvertently approves and issues an auto-suggested medication change (e.g., bendroflumethiazide to indapamide) with two clicks. | The AI system provides a mistaken output (a “hallucination”), and the clinician overlooks this error before transposing it into the patient’s record. |
| Effect | The system defaults to responding to the registered mobile number, which the patient doesn’t receive. | The erroneous duplication of diuretic medication occurs in the patient’s regimen. | Erroneous transcription is recorded in the patient’s medical record and subsequently used in decision-making. |
| Hazard | The design of the communication system defaults solely to the registered mobile number, without accommodating updates provided in the incoming request. This is an “accident waiting to happen.” | The auto-suggest functionality in ScriptSwitch presents a change in medication that can be approved too easily, especially when a clinician is distracted. | The reliance on automated transcription without robust verification safeguards creates a systemic vulnerability where errors could easily propagate. |
| Harm | Having not received a response, the patient assumes they don’t need to be seen, and their condition deteriorates, leading to hospital admission with urosepsis. (Note: The harm hasn’t happened yet in the hazard stage, but it’s the potential outcome if the hazard isn’t mitigated). | The patient becomes dehydrated due to the doubling of diuretics and collapses. | The patient later reads the entry on their NHS App and suffers significant distress and loss of trust, due to medication errors or incorrect personal details. |

Summary of terms:

- Cause: The initiating event or decision that starts the chain.

- Effect: The immediate consequence in the process; the deviation in the care pathway.

- Hazard: A potential source of harm to a patient; a latent vulnerability or design flaw that makes an error more likely or impactful.

- Harm: The ultimate negative outcome experienced by the patient, ranging from inconvenience to severe injury or death.
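As a rough illustration of how the four terms fit together, the chain can be written down as a simple record. This is only a sketch: the `HazardChain` class and its field names are hypothetical and not part of DCB0160 or any supplier's tooling, and the wording is condensed from the Accurx example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HazardChain:
    """One cause -> effect -> hazard -> harm chain (hypothetical structure)."""
    tool: str
    cause: str   # the initiating event or decision
    effect: str  # the immediate deviation in the care pathway
    hazard: str  # the latent vulnerability with potential to cause harm
    harm: str    # the potential outcome for the patient if unmitigated

    def summary(self) -> str:
        # Render the chain in the order used in the summary of terms.
        return (
            f"[{self.tool}] cause: {self.cause} | effect: {self.effect} | "
            f"HAZARD: {self.hazard} | potential harm: {self.harm}"
        )


# Condensed from the Accurx column of the example table.
accurx = HazardChain(
    tool="Accurx",
    cause="Patient submits a request from a mobile number not on their record",
    effect="Reply is sent to the registered number and is not received",
    hazard="System defaults solely to the registered mobile number",
    harm="Condition deteriorates, leading to hospital admission with urosepsis",
)

print(accurx.summary())
```

Keeping the hazard separate from the harm in the record mirrors the point above: the hazard exists as soon as the system is configured that way, whether or not any patient has yet been harmed.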

Hazard Workshop Techniques

Thinking about all the things that could go wrong can be overwhelming. This is where hazard workshops come in. These structured sessions, involving a small or large group, can help systematically identify potential hazards, their causes, effects, and potential harms. Some recognized techniques for systematically identifying hazards include:

- HAZID (Hazard Identification): A structured brainstorming technique using keywords like “none,” “wrong,” “late,” “incomplete,” or “duplicate” to consider safety consequences.

- FFA (Functional Failure Analysis): Takes a functional view of the system and considers potential safety consequences if a function is lost, wrong, or provided when not required.

- SWIFT (Structured What If Technique): A flexible group-based approach that combines brainstorming, structured discussion, and checklists to identify hazards, especially considering human and organizational factors.
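To show how a keyword-driven technique like HAZID can structure a workshop, the sketch below pairs each system function with each guide word to produce a list of discussion prompts. The guide words are the ones listed above; the function names are hypothetical examples chosen for illustration, and a real workshop would use the functions of the tool actually being deployed.

```python
from itertools import product

# Guide words quoted in the HAZID description above.
GUIDE_WORDS = ["none", "wrong", "late", "incomplete", "duplicate"]

# Hypothetical functions of an online consultation tool (illustrative only).
FUNCTIONS = ["patient request submission", "reply message to patient"]


def hazid_prompts(functions, guide_words=GUIDE_WORDS):
    """Return one discussion prompt per (function, guide word) pair."""
    return [
        f"{function} / {word}: what could go wrong, and who could be harmed?"
        for function, word in product(functions, guide_words)
    ]


prompts = hazid_prompts(FUNCTIONS)
for prompt in prompts:
    print(prompt)
```

Working through every pairing is deliberately exhaustive: many prompts will be dismissed quickly, but the grid makes it harder to overlook a failure mode simply because nobody thought to raise it.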

For widely adopted tools, you often won’t be starting from scratch. You’ll likely review a hazard log already created by the supplier or another organisation, adding any specific considerations for your practice. This is still most effectively done workshop-style. We have done this successfully in a remote format (such as Microsoft Teams) with staff from multiple practices, but it could also be done in a practice meeting.

- How Bad Could It Be?

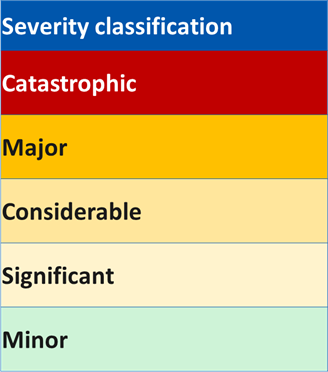

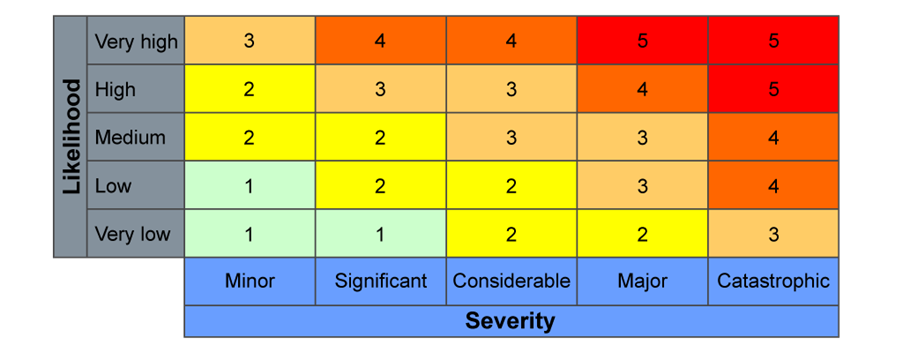

Once hazards are identified, the next step is to quantify the risk. This involves assessing two key factors for each potential harm:

- Severity: How serious would the harm be if it occurred?

- Likelihood: How probable is it that the harm would occur?

This helps us differentiate between risks. Missing a critically high potassium result, for example, has the potential for catastrophic harm (such as cardiac arrest and death), even if the likelihood of it being missed by a digital tool is low. Conversely, missing a borderline-low vitamin D result might have minor immediate severity, even if the likelihood of the tool misfiling it is higher.

Combining these two variables on a Clinical Risk Matrix (which you’ll typically see as a grid with severity on one axis and likelihood on the other) provides a risk score rating.

Anything with a risk score of 3 or above is generally considered undesirable. You might still decide to accept these risks, but doing so requires careful documentation and a clear rationale within your safety documents. This might be because the clinical benefits outweigh the risk, or perhaps the new system is still safer than the existing one. You’re only expected to go into detail for these undesirable risks.
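The lookup can be sketched in a few lines. Note the assumptions: the grid values and several of the category labels below are an assumed 5x5 matrix of the kind commonly used in NHS clinical risk management, not something stated in this case study. The only cells confirmed by this article are “considerable” severity with “high” likelihood (score 3) and with “low” likelihood (score 2). Always use the matrix defined in your own Clinical Risk Management Plan.

```python
# ASSUMED labels and values: check against the matrix in your own CRMP.
SEVERITIES = ["minor", "significant", "considerable", "major", "catastrophic"]
LIKELIHOODS = ["very low", "low", "medium", "high", "very high"]

# Rows follow LIKELIHOODS (very low first); columns follow SEVERITIES.
RISK_MATRIX = [
    [1, 1, 2, 2, 3],  # very low
    [1, 2, 2, 3, 4],  # low
    [2, 2, 3, 3, 4],  # medium
    [2, 3, 3, 4, 5],  # high
    [3, 4, 4, 5, 5],  # very high
]

UNDESIRABLE_THRESHOLD = 3  # "3 or above is generally considered undesirable"


def risk_score(severity: str, likelihood: str) -> int:
    """Look up the risk score for a severity/likelihood pair."""
    row = LIKELIHOODS.index(likelihood)
    column = SEVERITIES.index(severity)
    return RISK_MATRIX[row][column]


def is_undesirable(score: int) -> bool:
    """True when the score needs a documented rationale to be accepted."""
    return score >= UNDESIRABLE_THRESHOLD


score = risk_score("considerable", "high")
print(score, is_undesirable(score))
```

Under this assumed grid, a catastrophic harm stays above the threshold even at low likelihood, which is consistent with the potassium example above: severity alone can keep a risk in the undesirable range.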

This initial assessment gives us the initial/inherent risk – the risk before any additional controls are put in place by your practice. It’s the risk level when you “unbox” the tool for the first time.

- What Should We Do About It?

This is the final, crucial step: risk control. A control is what you (or the system) put in place to mitigate the risk of harm to the patient. Let’s revisit our examples and consider how controls can be applied:

| | Accurx | ScriptSwitch | Heidi Health |
| --- | --- | --- | --- |
| Reactive controls | Feedback to Accurx to ensure the default response is to the number the patient initiated contact with. Team awareness to double-check the response number. | Feedback to the medicines optimisation team to remove inappropriate switch recommendations. Reminders to GPs and pharmacists to check alerts carefully. | Staff are reminded to thoroughly check output for errors; clinician holds ultimate responsibility for what’s saved. |
| Pre-emptive controls | The system defaults to the number the patient contacted the practice with. | ScriptSwitch pops up a more prominent “are you sure?” alert. | Templates can be edited to remove certain auto-generated comments (e.g., who is accompanying the patient). Warnings within the product that the AI may make mistakes. |

Types of Controls

Controls generally fall into three categories:

- Design Controls: Incorporated into the product itself (e.g., system defaults, in-built warnings, alerts). These are the strongest as they can eliminate or detect the cause of the hazard.

- Business Process Controls: Standard operating procedures that guide how staff use the system (e.g., staff matching registered mobile numbers).

- Training Controls: User guides and courses that educate staff on safe use (e.g., staff reminded to check AI transcription accuracy).

It’s important to remember that all controls have the potential to fail, so relying on a single control is risky. Safe systems will employ multiple controls, especially for high-risk scenarios. This concept is often referred to as the “Swiss Cheese Model”: each layer of defence has holes, and an incident occurs when the holes in every layer happen to align, so adding layers makes that alignment less likely.
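A back-of-the-envelope calculation shows why layering helps. The sketch below assumes that controls fail independently of one another, which is a simplification (a distracted clinician may miss both a warning and a manual check), and the failure rates are made-up numbers for illustration, not measured data.

```python
from math import prod


def chance_all_controls_fail(failure_rates):
    """Probability that every control fails at once, ASSUMING independence."""
    return prod(failure_rates)


# Hypothetical failure rates, for illustration only.
design_control = 0.1    # e.g. an in-built warning is dismissed
process_control = 0.2   # e.g. a standard operating procedure is skipped
training_control = 0.5  # e.g. a reminder from training is forgotten

single_layer = chance_all_controls_fail([design_control])
three_layers = chance_all_controls_fail(
    [design_control, process_control, training_control]
)

print(f"One control:    {single_layer:.1%} chance the hazard gets through")
print(f"Three controls: {three_layers:.1%} chance the hazard gets through")
```

Even a weak extra layer reduces the combined chance of failure, but the result never reaches zero, which is why residual risk still has to be evaluated after controls are applied.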

The 4 Ts of Risk Control:

Another way to think about managing risk is using the “4 Ts”:

- Terminate: Avoid or eliminate the risk through design change (the most effective).

- Treat: Introduce additional control measures to reduce the likelihood or severity.

- Tolerate: Accept a level of risk after evaluating it (especially if the benefits outweigh the residual risk).

- Transfer: Hand over the risk to another party (e.g., the clinician taking responsibility for verifying AI output).

Most controls aim to reduce the likelihood of harm occurring rather than directly reducing the severity once it happens.

From Initial to Residual Risk

After implementing controls, you re-evaluate the risk to determine the residual clinical risk. This is the risk that remains after additional measures have been put in place to lower the likelihood and/or severity. For example, if an AI scribe initially has a “considerable” severity and “high” likelihood (risk level 3) due to potential hallucinations, implementing design controls (e.g., checking mechanisms, warning messages), process controls (e.g., mandatory clinician review), and training controls can reduce the likelihood to “low,” resulting in an “acceptable” residual risk (risk level 2).
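The AI scribe example can be laid out as a before-and-after comparison. This sketch deliberately fills in only the two matrix cells quoted in the paragraph above; the dictionary layout and field names are hypothetical, and a real hazard log would use the full matrix from your Clinical Risk Management Plan.

```python
# Only the two cells quoted in the AI scribe example are filled in.
KNOWN_SCORES = {
    ("considerable", "high"): 3,
    ("considerable", "low"): 2,
}

UNDESIRABLE_THRESHOLD = 3  # scores of 3 or above are considered undesirable

# Hypothetical hazard log entry for the AI scribe hallucination hazard.
entry = {
    "hazard": "AI scribe output contains a hallucination",
    "severity": "considerable",
    "initial_likelihood": "high",
    "controls": [
        "Design: checking mechanisms and warning messages",
        "Process: mandatory clinician review",
        "Training: staff taught to check output for errors",
    ],
    "residual_likelihood": "low",
}

initial = KNOWN_SCORES[(entry["severity"], entry["initial_likelihood"])]
residual = KNOWN_SCORES[(entry["severity"], entry["residual_likelihood"])]

print(f"Initial risk:  {initial}")
print(f"Residual risk: {residual}")
print("Acceptable after controls:", residual < UNDESIRABLE_THRESHOLD)
```

Note that the severity is unchanged between the two lookups. In this example the controls act only on likelihood, which matches the earlier observation that most controls reduce how likely harm is rather than how severe it would be.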

What Makes Up the DCB0160?

All this risk analysis, evaluation, and control work needs to be documented. The DCB0160 standard requires four key documents, which should be stored together in a Clinical Risk Management File (e.g., a shared drive or Teams folder):

- Clinical Risk Management Plan (CRMP): This is your overarching document. It outlines your practice’s clinical risk management activities, identifies who is responsible for approvals, defines risk acceptability criteria, and specifies who will perform various tasks, like maintaining the Hazard Log.

- Hazard Log: This is a living document, typically an Excel spreadsheet, where you record and communicate all identified hazards, their causes, effects, harms, initial risk, existing controls, and residual risk. You should receive a copy of the supplier’s hazard log as a starting point. Your job is to scrutinize it and add any practice-specific considerations.

- Clinical Safety Case Report (CSCR): Think of this as your argument and evidence for the clinical safety of the digital tool you’re using. It provides confidence that the system will not pose unacceptable risks to patients. The manufacturer is legally mandated to provide you with their CSCR, which often outlines risks transferred to the clinician. This document also needs to be updated as the system or your use of it evolves.

- Incident Management Log: This is where you record, manage, and resolve safety incidents related to the new system. It doesn’t necessarily need to be a separate log; you can integrate it into your existing practice incident management system. The key is to log and learn from incidents, proactively making changes to prevent recurrence and sharing lessons learned with others.

Collaborative efforts are underway across London to create pre-populated templates for these documents, making it easier for practices to review, understand, and adapt them, thereby supporting digital tool adoption while improving safety and compliance. For instance, a first template DCB0160 for Heidi Health AI scribe has recently been developed by the Federation, offering a valuable starting point for practices considering such tools. The intention is to support the clinical safety process, but the practice takes ultimate responsibility for the accuracy, relevance, and implementation of the documents.

Clinical Safety in Practice: Collaboration and Learning

While digital clinical safety might seem daunting, you’re not alone. My hope is to create a community of practice (COP) of trained CSOs in Wandsworth so we can collaborate, critique, share and learn. There is no use in pretending that attending a one-day online course is going to make us safety experts. We need to work together to do this well.

This includes working with other partners, such as Claire McAuley, Clinical Safety Officer at South West London ICB, who has been offering valuable input to the template DCB0160 documents we are creating. We are also working closely with suppliers to ensure they provide support where necessary for practices to meet their own DCB0160 compliance.

The ICB also hosts quarterly digital clinical safety meetings (currently focussed on online consultation tools) to discuss and disseminate learning from incidents. While these don’t replace your practice’s internal incident reporting, you can and should report any issues you experience with tools like Accurx or eConsult, as well as other digital tools where the learning could be applicable to other practices. You can find the reporting form here: https://bit.ly/SWLDigitalSafetyOCVC

Digital clinical safety is an ongoing process. We need to remember that the risks involved with introducing digital tools are not only present at the point they are introduced. It is essential to audit, monitor, respond to feedback and learn from incidents (our own and those experienced by others) to ensure continuous improvement in patient safety. By understanding the core principles, asking the right questions, and utilising available resources, we can confidently and safely embrace the benefits that digital tools bring to our practices and ultimately to patient care.

Author: Dr Devin Gray, Clinical Lead for Digital Transformation at Wandsworth GP Federation

Resources

For clinical risk management training (including the CSO practitioner course) see: https://digital.nhs.uk/services/clinical-safety/clinical-risk-management-training.

NHS Digital Resources

- https://digital.nhs.uk/services/clinical-safety

- https://digital.nhs.uk/services/clinical-safety/documentation

- https://digital.nhs.uk/services/clinical-safety/applicability-of-dcb-0129-and-dcb-0160

Policy Guidance on Recording Patient Safety Events and Levels of Harm: https://www.england.nhs.uk/long-read/policy-guidance-on-recording-patient-safety-events-and-levels-of-harm/

Guidance on the Use of AI-Enabled Ambient Scribing Products: https://www.england.nhs.uk/long-read/guidance-on-the-use-of-ai-enabled-ambient-scribing-products-in-health-and-care-settings/

[1] https://www.gponline.com/gps-advised-stop-using-ai-scribes-without-key-assurances/article/1922151

[2] https://www.cqc.org.uk/guidance-providers/gps/gp-mythbusters/gp-mythbuster-109-artificial-intelligence-gp-services

[5] https://transform.england.nhs.uk/key-tools-and-info/digital-technology-assessment-criteria-dtac/